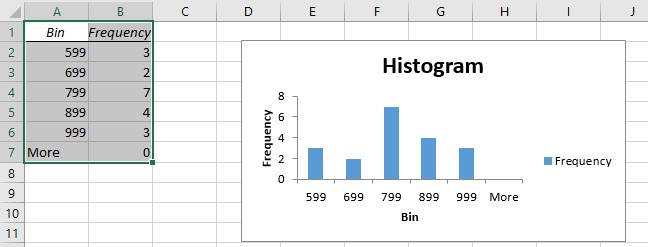

Histograms are the go-to solution when you want to show distribution frequency. No wonder, then, that Tableau offers an out-of-the-box solution for this: you can have Tableau auto-detect and suggest bin sizes, you can set them manually, or you are even free to use a parameter to adjust your bins on the fly. There are also multiple solutions for grouping everything above a user-defined threshold, which avoids situations where a few outliers leave you with an endless tail of empty bins.

What if filtering your histogram makes your currently used bin sizes meaningless?

Assume you have a 10-bin histogram in steps of 1,000, but now you filter down to a sub-segment of your data and boom, what looked great and made sense before now has an empty tail again. Or vice versa: you build your histogram on a filtered data subset, and when you remove the filter you find that your bin count has tripled because your previous bin size of 1,000 per bin just does not cover the entirety of your now unfiltered data set.

On a dashboard, that might lead to a bad user experience when a histogram that had 10 bins suddenly has 30 and becomes unreadable. You might use a parameter and have the user readjust it manually every time they filter, but that is a) one additional step, and b) the user would have to play around with the parameter until the bin sizes are set in such a way that the chart becomes readable again. Maybe not the best user experience we can offer.

Making your histogram self-adjusting

For this exercise, we want to create a histogram that gives us the number of orders binned by their corresponding sales value. If order 1 had a total sales value of 100, order 2 had sales of 200, and our bin size is 100, then we would see a count of 1 in each of the two bins.

Since we will be using Sample Superstore, we need to do some minor prep work: we need to write a small FIXED level of detail calculation to account for the fact that each order can consist of multiple products. Each product has its individual sales value attributed, and we will be binning by sales, so an individual order number with two products could occur in two bins, which is not what we want.

So, with that explained, here is the small calculation:

//sum of sales per order id

However, when we derive the bins from sales directly, we see the order ID in two different bins, 2,700 and 600 respectively. The next column shows that when we use our level of detail calculation, both products belong to the same bin of 3,300, which reflects the overall value of the order.
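To make the order-level binning concrete outside Tableau, here is a minimal Python sketch. It is an illustration under stated assumptions, not the author's calculation: the sample rows, the function names, and the rule in `adjusted_bin_size` (spread the observed range over a fixed number of bins) are all hypothetical. In Tableau itself, "sum of sales per order ID" would usually be written as a FIXED level of detail expression of the form `{ FIXED [Order ID] : SUM([Sales]) }`; that shape is my assumption as well, since the original calculation is not shown here.

```python
import math
from collections import Counter, defaultdict

# Hypothetical order lines: (order_id, product, sales).
# Order "A" mirrors the two-product example from the text (2,700 + 600).
order_lines = [
    ("A", "Chair", 2700),
    ("A", "Binder", 600),
    ("B", "Phone", 100),
    ("C", "Paper", 200),
]


def sales_per_order(lines):
    """Sum sales per order ID, ignoring the product level."""
    totals = defaultdict(float)
    for order_id, _product, sales in lines:
        totals[order_id] += sales
    return dict(totals)


def bin_floor(value, bin_size):
    """Return the lower edge of the bin that contains value."""
    return math.floor(value / bin_size) * bin_size


def histogram(values, bin_size):
    """Count how many values fall into each bin, keyed by lower bin edge."""
    return dict(Counter(bin_floor(v, bin_size) for v in values))


def adjusted_bin_size(values, target_bins=10):
    """Assumed rule: divide the observed range by a fixed bin count."""
    values = list(values)
    if not values:
        return 1
    span = max(values) - min(values)
    return max(1, math.ceil(span / target_bins))


# Binning raw rows splits order "A" across two bins.
row_level = histogram([sales for _, _, sales in order_lines], 100)

# Binning order totals keeps order "A" in a single bin.
order_totals = sales_per_order(order_lines)
order_level = histogram(order_totals.values(), 100)

print(row_level)
print(order_level)
print(adjusted_bin_size(order_totals.values(), target_bins=10))
```

Recomputing `adjusted_bin_size` on whatever subset survives a filter is one way to keep the bin count roughly stable as the data range changes, which is the behaviour the post is after; how the post itself achieves this in Tableau is not covered by this sketch.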
